Object Tracking via Multimodal Sensor System - Tri-Kalman Approach

Robust tracking using tri-Kalman filters and decision-level sensor fusion

Overview

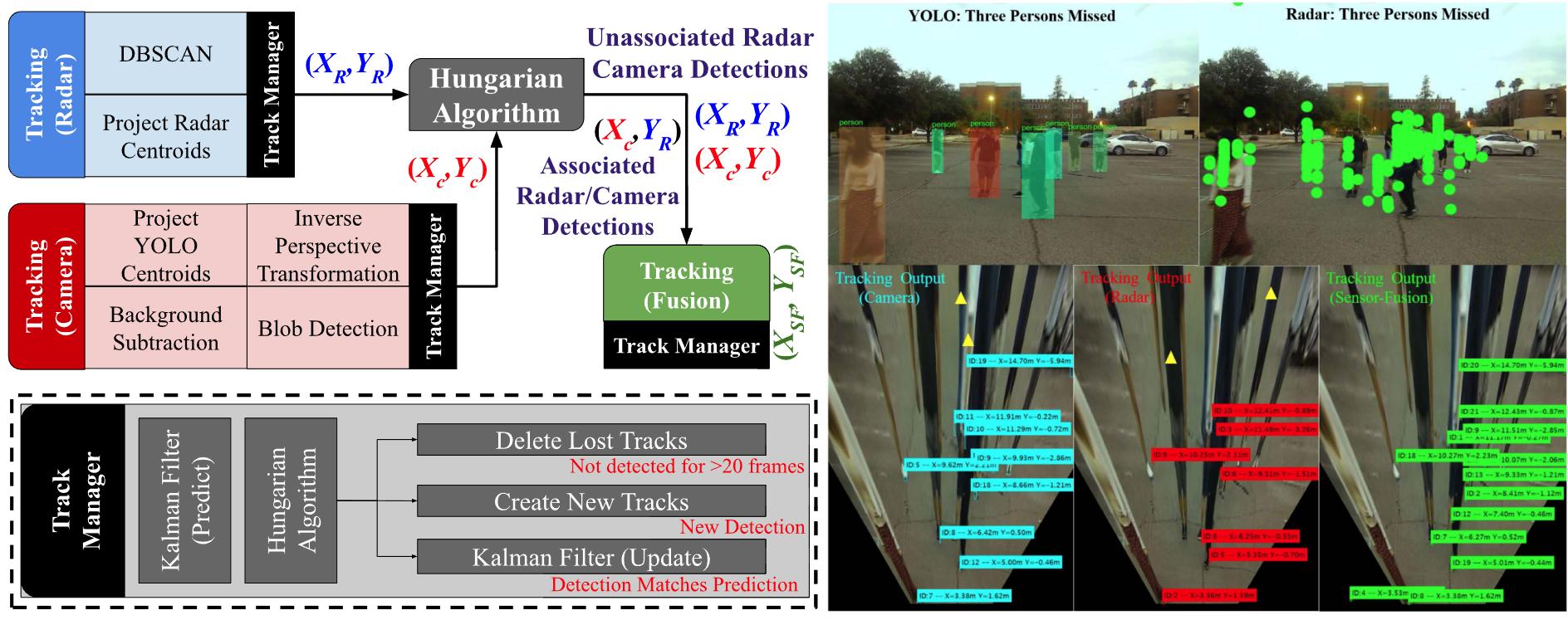

With the recent growth of the autonomous-vehicle and automotive industries, sensor-fusion-based perception has attracted significant attention for multi-object classification and tracking. This project presents a robust tracking framework based on high-level fusion of monocular-camera and millimeter-wave radar data. The method aims to improve localization accuracy by combining the radar's range (depth) resolution with the camera's cross-range resolution through decision-level sensor fusion. It also makes the system robust to single-sensor failures by continuing to track objects with a tri-Kalman filter setup. The approach achieves promising MOTA (multiple object tracking accuracy) and MOTP (multiple object tracking precision) scores with a low missed-detection rate.
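
The page does not spell out how the three filters interact. One plausible arrangement, sketched below under stated assumptions, runs a camera-only, a radar-only, and a fused Kalman filter side by side with a constant-velocity model. When both sensors see the object, their position reports are fused by inverse-covariance weighting; when one sensor drops out, the tracker reports the filter of the sensor that is still measuring. The state layout, noise values, `DT`, and the names `KalmanFilter`, `fuse`, and `TriKalmanTrack` are hypothetical and not taken from the project.

```python
import numpy as np

DT = 0.1  # hypothetical frame interval in seconds

# Constant-velocity model in the bird's-eye-view plane: state = [x, y, vx, vy],
# where x is cross-range (lateral) and y is range (forward), in metres.
F = np.array([[1, 0, DT, 0],
              [0, 1, 0, DT],
              [0, 0, 1, 0],
              [0, 0, 0, 1]], dtype=float)
H = np.array([[1, 0, 0, 0],
              [0, 1, 0, 0]], dtype=float)   # both sensors report position only
Q = 0.05 * np.eye(4)                        # hypothetical process noise

# Hypothetical measurement noise: the camera is precise in cross-range,
# the radar is precise in range.
R_CAM = np.diag([0.1 ** 2, 2.0 ** 2])
R_RAD = np.diag([1.0 ** 2, 0.2 ** 2])


class KalmanFilter:
    """Linear Kalman filter sharing the global F, H, Q with its own R."""

    def __init__(self, x0, R):
        self.x = np.asarray(x0, dtype=float).copy()
        self.P = np.eye(4)
        self.R = R

    def predict(self):
        self.x = F @ self.x
        self.P = F @ self.P @ F.T + Q

    def update(self, z):
        y = np.asarray(z, dtype=float) - H @ self.x   # innovation
        S = H @ self.P @ H.T + self.R                 # innovation covariance
        K = self.P @ H.T @ np.linalg.inv(S)           # Kalman gain
        self.x = self.x + K @ y
        self.P = (np.eye(4) - K @ H) @ self.P


def fuse(z_cam, z_rad):
    """Decision-level fusion of two position reports by inverse-covariance weighting."""
    info_cam, info_rad = np.linalg.inv(R_CAM), np.linalg.inv(R_RAD)
    R_fused = np.linalg.inv(info_cam + info_rad)
    z_fused = R_fused @ (info_cam @ np.asarray(z_cam, dtype=float)
                         + info_rad @ np.asarray(z_rad, dtype=float))
    return z_fused, R_fused


class TriKalmanTrack:
    """Camera-only, radar-only and fused filters run in parallel, so the
    track survives when either sensor misses the object."""

    def __init__(self, x0):
        _, R_fused = fuse(np.zeros(2), np.zeros(2))   # fused noise is constant here
        self.cam = KalmanFilter(x0, R_CAM)
        self.rad = KalmanFilter(x0, R_RAD)
        self.fus = KalmanFilter(x0, R_fused)

    def step(self, z_cam=None, z_rad=None):
        """Advance one frame; pass None for a sensor that missed the object.
        Returns (source, state estimate)."""
        for kf in (self.cam, self.rad, self.fus):
            kf.predict()
        if z_cam is not None:
            self.cam.update(z_cam)
        if z_rad is not None:
            self.rad.update(z_rad)
        if z_cam is not None and z_rad is not None:
            self.fus.update(fuse(z_cam, z_rad)[0])
            return "fused", self.fus.x.copy()
        if z_cam is not None:
            return "camera", self.cam.x.copy()
        if z_rad is not None:
            return "radar", self.rad.x.copy()
        return "predicted", self.fus.x.copy()   # no detections: coast on the motion model


if __name__ == "__main__":
    track = TriKalmanTrack([0.0, 10.0, 0.0, 0.0])
    # Both sensors, then a camera dropout, then a radar dropout.
    frames = [([0.0, 10.2], [0.3, 10.0]), (None, [0.3, 10.1]), ([0.1, 9.5], None)]
    for z_cam, z_rad in frames:
        source, state = track.step(z_cam, z_rad)
        print(source, state[:2].round(2))
```

Because every filter keeps predicting while its sensor is silent, a dropout changes which estimate is reported rather than ending the track. The inverse-covariance weights make the fused position follow the camera laterally and the radar in range, which mirrors the complementary resolutions described above.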

Key Highlights

- Sensor Fusion

- Robust Tracking

- Tri-Kalman Filter

- Decision-Level Fusion

- Low Missed Detection
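
The missed-detection rate and the MOTA/MOTP scores mentioned in the overview belong to the CLEAR MOT family of metrics. As a minimal sketch, assuming per-frame matching between tracks and ground truth has already been done and that MOTP is reported as the mean distance of matched pairs (some benchmarks use bounding-box overlap instead, and the page does not say which variant was used), they can be computed as follows. `FrameStats` and `clear_mot` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class FrameStats:
    """Per-frame outcome of matching tracker output to ground truth."""
    num_gt: int             # ground-truth objects present in the frame
    misses: int             # FN: ground-truth objects with no matched track
    false_positives: int    # FP: reported tracks with no ground-truth match
    id_switches: int        # IDSW: a ground-truth object changed track identity
    match_distances: List[float] = field(default_factory=list)  # per matched pair, metres


def clear_mot(frames):
    """Return (MOTA, MOTP, missed-detection rate) over a sequence of frames."""
    total_gt = sum(f.num_gt for f in frames)
    total_misses = sum(f.misses for f in frames)
    errors = sum(f.misses + f.false_positives + f.id_switches for f in frames)
    distances = [d for f in frames for d in f.match_distances]
    mota = 1.0 - errors / total_gt   # can go negative when errors exceed objects
    motp = sum(distances) / len(distances) if distances else float("nan")
    miss_rate = total_misses / total_gt
    return mota, motp, miss_rate


if __name__ == "__main__":
    frames = [
        FrameStats(2, 0, 0, 0, [0.2, 0.4]),
        FrameStats(2, 1, 0, 0, [0.3]),        # one object missed
        FrameStats(2, 0, 1, 1, [0.1, 0.5]),   # one false track and one ID switch
    ]
    mota, motp, miss_rate = clear_mot(frames)
    print(f"MOTA={mota:.3f} MOTP={motp:.3f} m miss rate={miss_rate:.3f}")
```

For these three illustrative frames, three errors over six ground-truth objects give a MOTA of 0.5, and the mean matched distance gives a MOTP of 0.3 m. Since missed detections enter MOTA directly, a low miss rate is one of the main levers for a high MOTA.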

Methodology

The framework performs decision-level sensor fusion within a tri-Kalman filter setup, uses camera intrinsic calibration to estimate object positions in a bird's-eye view (BEV), and applies the Hungarian algorithm to associate measurements with tracks.
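
The methodology mentions only intrinsic calibration for the bird's-eye view, so the sketch below assumes the simplest setup in which that suffices: a level (zero-pitch) camera at a known height above flat ground, with the focal lengths `fx`, `fy` and principal point `cx`, `cy` taken from the intrinsic calibration, and the bottom centre of each bounding box treated as the ground-contact point. BEV detections are then associated with predicted track positions by running the Hungarian algorithm on a Euclidean-distance cost matrix and rejecting pairs beyond a distance gate. The function names `pixel_to_bev`, `hungarian`, and `associate`, the 2 m gate, and the flat-ground assumption are hypothetical, not the project's documented implementation.

```python
import math


def pixel_to_bev(u, v, fx, fy, cx, cy, cam_height):
    """Flat-ground inverse perspective mapping for a level camera.

    (u, v) is the pixel where the object touches the ground. Returns
    (lateral, forward) in metres.
    """
    y_n = (v - cy) / fy                  # normalised coordinate below the principal point
    if y_n <= 0:
        raise ValueError("pixel is at or above the horizon")
    forward = cam_height / y_n           # similar triangles: y_n = h / Z
    lateral = (u - cx) / fx * forward    # back-project the horizontal offset
    return lateral, forward


def hungarian(cost):
    """Minimum-cost assignment (Hungarian algorithm, O(n^2 m)) for n <= m rows.

    Returns (row, col) pairs covering every row.
    """
    n, m = len(cost), len(cost[0])
    u, v = [0.0] * (n + 1), [0.0] * (m + 1)   # row / column potentials
    p, way = [0] * (m + 1), [0] * (m + 1)     # p[j]: row assigned to column j
    for i in range(1, n + 1):
        p[0], j0 = i, 0
        minv, used = [math.inf] * (m + 1), [False] * (m + 1)
        while True:   # grow the alternating tree until a free column is reached
            used[j0] = True
            i0, delta, j1 = p[j0], math.inf, 0
            for j in range(1, m + 1):
                if not used[j]:
                    cur = cost[i0 - 1][j - 1] - u[i0] - v[j]
                    if cur < minv[j]:
                        minv[j], way[j] = cur, j0
                    if minv[j] < delta:
                        delta, j1 = minv[j], j
            for j in range(m + 1):   # shift potentials by the smallest slack
                if used[j]:
                    u[p[j]] += delta
                    v[j] -= delta
                else:
                    minv[j] -= delta
            j0 = j1
            if p[j0] == 0:
                break
        while j0:     # augment along the alternating path
            j1 = way[j0]
            p[j0] = p[j1]
            j0 = j1
    return [(p[j] - 1, j - 1) for j in range(1, m + 1) if p[j]]


def associate(tracks, detections, gate=2.0):
    """Match predicted track positions to BEV detections, rejecting pairs farther
    apart than `gate` metres. Returns (matches, unmatched_tracks, unmatched_dets)."""
    if not tracks or not detections:
        return [], list(range(len(tracks))), list(range(len(detections)))
    cost = [[math.dist(t, d) for d in detections] for t in tracks]
    if len(tracks) <= len(detections):
        pairs = hungarian(cost)
    else:   # the solver needs rows <= columns, so solve the transpose
        pairs = [(r, c) for c, r in hungarian([list(col) for col in zip(*cost)])]
    matches = sorted((r, c) for r, c in pairs if cost[r][c] <= gate)
    matched_t = {r for r, _ in matches}
    matched_d = {c for _, c in matches}
    unmatched_t = [i for i in range(len(tracks)) if i not in matched_t]
    unmatched_d = [j for j in range(len(detections)) if j not in matched_d]
    return matches, unmatched_t, unmatched_d
```

In practice a library solver such as SciPy's `scipy.optimize.linear_sum_assignment` would replace the hand-written `hungarian`. The gate matters because the assignment is forced to be complete: without it, a distant clutter detection could be attached to a track simply because it was the only one left.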

Project Details

- Start Date: September 2020

- End Date: April 2022

- Status: Completed

Resources

Related Publications

- A. Sengupta, L. Cheng, S. Cao. IEEE Sensors Letters, 2022.

- A. Sengupta, F. Jin, S. Cao. IEEE National Aerospace and Electronics Conference (NAECON), 2019.

- R. Zhang, S. Cao. IEEE Access, 2019.

- R. Zhang, S. Cao. 53rd Asilomar Conference on Signals, Systems and Computers, 2019.