Object Tracking via Multimodal Sensor System - Deep Learning Approach

Bi-LSTM based robust multi-object tracking via radar-camera fusion

Overview

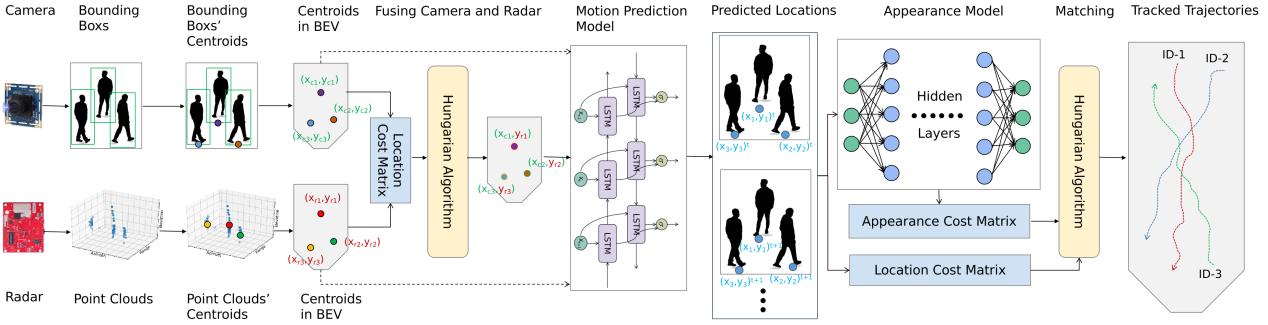

Autonomous driving holds great promise for improving traffic safety by combining artificial intelligence with sensor technology. This project presents a novel deep learning-based method that fuses radar and camera data to improve the accuracy and robustness of multi-object tracking (MOT) in autonomous driving systems. A bidirectional Long Short-Term Memory (Bi-LSTM) network incorporates long-term temporal information to improve motion prediction. An appearance feature model inspired by FaceNet associates objects across frames. A tri-output mechanism, which produces radar, camera, and fused outputs, provides robustness against sensor failures and yields accurate tracking results even in low-visibility scenarios.
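The page does not give the network's inputs, layer sizes, or training setup. As a rough illustration only, the NumPy sketch below shows the general shape of a Bi-LSTM motion predictor: one LSTM reads a track's position history forward in time, a second reads the same history in reverse, and a linear layer maps the two concatenated final states to a predicted next-step displacement. The class names, the 2-D position input, the hidden size, and the untrained random weights are all assumptions made for this example, not details of the project's model.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


class LSTMCell:
    """Single-direction LSTM with randomly initialised (untrained) weights."""

    def __init__(self, input_dim: int, hidden_dim: int, rng: np.random.Generator):
        self.hidden_dim = hidden_dim
        scale = 1.0 / np.sqrt(hidden_dim)
        # Stacked weights for the input, forget, candidate and output gates.
        self.W = rng.uniform(-scale, scale, (4 * hidden_dim, input_dim))
        self.U = rng.uniform(-scale, scale, (4 * hidden_dim, hidden_dim))
        self.b = np.zeros(4 * hidden_dim)

    def run(self, seq: np.ndarray) -> np.ndarray:
        """Consume a (T, input_dim) sequence and return the final hidden state."""
        H = self.hidden_dim
        h = np.zeros(H)
        c = np.zeros(H)
        for x in seq:
            z = self.W @ x + self.U @ h + self.b
            i = sigmoid(z[:H])              # input gate
            f = sigmoid(z[H:2 * H])         # forget gate
            g = np.tanh(z[2 * H:3 * H])     # candidate cell state
            o = sigmoid(z[3 * H:])          # output gate
            c = f * c + i * g
            h = o * np.tanh(c)
        return h


class BiLSTMMotionPredictor:
    """Hypothetical Bi-LSTM head: track history in, next-step displacement out."""

    def __init__(self, input_dim: int = 2, hidden_dim: int = 16, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.fwd = LSTMCell(input_dim, hidden_dim, rng)
        self.bwd = LSTMCell(input_dim, hidden_dim, rng)
        # Linear read-out from the concatenated states to a displacement vector.
        self.W_out = rng.uniform(-0.1, 0.1, (input_dim, 2 * hidden_dim))
        self.b_out = np.zeros(input_dim)

    def predict(self, history: np.ndarray) -> np.ndarray:
        h_fwd = self.fwd.run(history)         # reads oldest -> newest frame
        h_bwd = self.bwd.run(history[::-1])   # reads newest -> oldest frame
        features = np.concatenate([h_fwd, h_bwd])
        return self.W_out @ features + self.b_out


# Usage: predict where a track moves next from its last four positions.
history = np.array([[0.0, 0.0], [0.5, 0.1], [1.0, 0.2], [1.5, 0.3]])
model = BiLSTMMotionPredictor()
next_position = history[-1] + model.predict(history)
```

Because the backward pass needs the whole window, a Bi-LSTM of this kind works on a buffered history of past frames rather than one frame at a time. With random weights the predicted displacement only demonstrates the data flow; a real tracker would learn the weights from labelled trajectories.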

Key Highlights

- Autonomous Driving

- Multi-Object Tracking

- Bi-LSTM

- Sensor Fusion

- Low-Visibility Robustness

Methodology

- Bi-directional LSTM for temporal information and motion prediction

- FaceNet-inspired appearance features for object association

- Tri-output mechanism producing radar, camera, and fusion outputs
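FaceNet learns embeddings with a triplet loss that pulls an anchor toward a positive example of the same identity and pushes it away from a negative example by at least a margin. The sketch below assumes the project's appearance model follows the same idea. It shows that loss, a simple matcher that pairs existing tracks with new detections by embedding distance, and a fallback selector over the three outputs. The function names, the 0.2 margin, the 0.5 distance gate, greedy matching (used here in place of, for example, Hungarian assignment), and the fallback order in `select_output` are illustrative assumptions, not the project's published design.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Project embeddings onto the unit hypersphere, as FaceNet does."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def triplet_loss(anchor, positive, negative, margin: float = 0.2) -> float:
    """FaceNet-style triplet loss averaged over a batch of (N, D) embeddings."""
    a, p, n = (l2_normalize(v) for v in (anchor, positive, negative))
    d_pos = np.sum((a - p) ** 2, axis=1)   # squared distance to same identity
    d_neg = np.sum((a - n) ** 2, axis=1)   # squared distance to other identity
    return float(np.mean(np.maximum(d_pos - d_neg + margin, 0.0)))


def associate(track_emb, det_emb, max_dist: float = 0.5):
    """Greedily pair tracks with detections by squared embedding distance."""
    t = l2_normalize(track_emb)
    d = l2_normalize(det_emb)
    dist = np.sum((t[:, None, :] - d[None, :, :]) ** 2, axis=2)
    matches, used_t, used_d = [], set(), set()
    # Visit all (track, detection) pairs from closest to farthest.
    for flat in np.argsort(dist, axis=None):
        i, j = (int(k) for k in np.unravel_index(flat, dist.shape))
        if dist[i, j] > max_dist:
            break  # every remaining pair is even farther apart
        if i in used_t or j in used_d:
            continue
        matches.append((i, j))
        used_t.add(i)
        used_d.add(j)
    return matches


def select_output(radar, camera, fused):
    """Hypothetical fallback: prefer the fused output, else a single sensor."""
    if fused is not None:
        return "fusion", fused
    if radar is not None:
        return "radar", radar
    if camera is not None:
        return "camera", camera
    return "none", None
```

Each track keeps the embedding of its last matched detection; unmatched detections would start new tracks, and unmatched tracks would be kept alive for a few frames by the motion model.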


Project Details

- Start Date: September 2024

- Status: Active

Resources

Related Publications

- L. Cheng, A. Sengupta, S. Cao. IEEE Transactions on Intelligent Transportation Systems, 2024.

- A. Sengupta, A. Yoshizawa, S. Cao. IEEE Robotics and Automation Letters, 2022.